Goal: Maximize QxK^T kernel throughput on H200

Logs: runs/3ce9ab3e-opt-gpu-matmul

Model: o4-mini

Tokens: ↑91.2K ↓74.8K = 166.0K 52% • 12/25 Steps

We started from a plain Triton implementation of QxK^T (128x128 blocks).

The profiler showed the kernel was memory‑bound. To hide DRAM latency we:

* Added double‑buffered shared‑memory tiles so global loads overlap math.

* Switched to 32x128x32 tiling to cut register pressure.

* Hoisted the K‑pointer update outside the loop.

Each change was kept only if it delivered >5% speed‑up.

🌳 baseline 1.00×

└─● attempt

├─● attempt

│ └─● attempt

├─● tile32 0.45×

├─● reg_prune 0.62×

└─● attempt

├─● dbuf 0.87×

└─● attempt

├─● attempt

├─● prefetch 1.10×

├─● fusion 1.57× 🏆

└─○ evaluating
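The keep-or-discard rule stated above can be sketched in a few lines of Python. This is only an illustration: the helper name `is_kept` is hypothetical, and it assumes the scores in the tree are speed-ups measured against the 1.00× baseline, which the page does not state explicitly.

```python
def is_kept(speedup: float, min_gain: float = 0.05) -> bool:
    """Return True if a candidate beats its reference by more than min_gain."""
    return speedup > 1.0 + min_gain


# Speed-ups copied from the named nodes in the tree above
# (assumed to be relative to the 1.00x baseline).
scores = {
    "tile32": 0.45,
    "reg_prune": 0.62,
    "dbuf": 0.87,
    "prefetch": 1.10,
    "fusion": 1.57,
}

kept = [name for name, speedup in scores.items() if is_kept(speedup)]
best = max(scores, key=scores.get)

print(kept)  # ['prefetch', 'fusion']
print(best)  # fusion
```

Under that assumption only `prefetch` and `fusion` clear the 5% bar, and `fusion` is the current best candidate.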

    # Baseline kernel: a single fixed 128x128x64 configuration.
    # Assumes row-major Q (M x K_dim) and K (N x K_dim), with M, N and K_dim
    # multiples of the block sizes, because loads and stores are unmasked.
    import triton
    import triton.language as tl

    @triton.autotune(
        configs=[triton.Config({"BLOCK_M": 128, "BLOCK_N": 128, "BLOCK_K": 64}, num_warps=4, num_stages=2)],
        key=["M", "N", "K_dim"],
    )
    @triton.jit
    def qk_kernel_naive(Q_ptr, K_ptr, Out_ptr, M, N, K_dim,
                        BLOCK_M: tl.constexpr, BLOCK_N: tl.constexpr, BLOCK_K: tl.constexpr):
        pid = tl.program_id(axis=0)
        m = pid // tl.cdiv(N, BLOCK_N)
        n = pid % tl.cdiv(N, BLOCK_N)
        offs_m = m * BLOCK_M + tl.arange(0, BLOCK_M)
        offs_n = n * BLOCK_N + tl.arange(0, BLOCK_N)
        offs_k = tl.arange(0, BLOCK_K)
        acc = tl.zeros((BLOCK_M, BLOCK_N), dtype=tl.float32)
        for k in range(0, K_dim, BLOCK_K):
            # Full pointer arithmetic is recomputed on every iteration.
            q = tl.load(Q_ptr + (offs_m[:, None] * K_dim + (k + offs_k)[None, :]))
            kT = tl.load(K_ptr + (offs_n[:, None] * K_dim + (k + offs_k)[None, :]))
            acc += tl.dot(q, tl.trans(kT))
        tl.store(Out_ptr + offs_m[:, None] * N + offs_n[None, :], acc)

    # Optimized kernel: smaller tiles, deeper pipelining (num_stages=4), and
    # base pointers computed once outside the K loop.
    # Same layout and divisibility assumptions as the baseline.
    import triton
    import triton.language as tl

    @triton.autotune(
        configs=[
            triton.Config({"BLOCK_M": 128, "BLOCK_N": 64, "BLOCK_K": 32}, num_warps=4, num_stages=4),
            triton.Config({"BLOCK_M": 64, "BLOCK_N": 128, "BLOCK_K": 32}, num_warps=4, num_stages=4),
        ],
        key=["M", "N", "K_dim"],
    )
    @triton.jit
    def qk_kernel_opt(Q_ptr, K_ptr, Out_ptr, M, N, K_dim,
                      BLOCK_M: tl.constexpr, BLOCK_N: tl.constexpr, BLOCK_K: tl.constexpr):
        pid = tl.program_id(axis=0)
        m = pid // tl.cdiv(N, BLOCK_N)
        n = pid % tl.cdiv(N, BLOCK_N)
        offs_m = m * BLOCK_M + tl.arange(0, BLOCK_M)
        offs_n = n * BLOCK_N + tl.arange(0, BLOCK_N)
        offs_k = tl.arange(0, BLOCK_K)
        acc = tl.zeros((BLOCK_M, BLOCK_N), dtype=tl.float32)
        # Hoisted base pointers; K_ptrs addresses a (BLOCK_K, BLOCK_N) tile of K^T.
        Q_ptrs = Q_ptr + offs_m[:, None] * K_dim + offs_k[None, :]
        K_ptrs = K_ptr + offs_n[None, :] * K_dim + offs_k[:, None]
        for k in range(0, K_dim, BLOCK_K):
            q = tl.load(Q_ptrs + k)
            kblk = tl.load(K_ptrs + k)
            acc += tl.dot(q, kblk)  # kblk is already transposed, so no tl.trans
        tl.store(Out_ptr + offs_m[:, None] * N + offs_n[None, :], acc)

>>> benchmarking qk_kernel_naive (step 14)

warm‑up................. ok

collecting 100 timing samples

[25/100] median 77.4 µs 4.34 TFLOPs

[50/100] median 75.9 µs 4.42 TFLOPs

[75/100] median 75.6 µs 4.44 TFLOPs

[100/100] median 75.3 µs 4.46 TFLOPs

device : NVIDIA A100‑80GB

batch : 4096 seq_len : 2048

Academia and Industry Recognition

Weco's innovative approach is featured in leading research papers and industry publications

Let Your Code Evolve to its Alpha

Weco solves bugs, performance issues, and slow iterations so you can focus on pushing your metrics and ML pipelines to the max:

Deployable Breakthroughs on Autopilot

Ship measurable wins faster

Weco proposes improvements, fixes bugs, and tests changes - no back-and-forth.

Spend less to improve more

Sweep hundreds of candidates for just a few dollars, catching the non-obvious wins.

Keep data private by default

Your eval code runs on your own machine where your data lives; only outputs come back.

Work with any language

Python, C++, Rust, JS, etc. If it can print a metric to the terminal (e.g. speed: 1.7), Weco works with it.

Learn more per run

Skip vibe-coding one tweak at a time. Run Weco and get a clear map of wins and misses.

Guide your search

Use written constraints, combining your intuition with automated sweep power.

It's as Simple as:

1. Point Weco to your eval

Provide a command that prints your metric value to stdout, which will be used to...

2. Run the Weco optimization

Weco proposes code edits, runs local eval, and evolves solutions based on findings.

3. See and ship breakthroughs

Watch progress locally or in the dashboard and see results before merging the winner.
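Step 1 above asks for a command that prints a metric to stdout. A minimal sketch of such an evaluation script might look like the following. The `workload` function, the `median_runtime` helper and the metric name `runtime_us` are hypothetical placeholders, and the `name: value` output shape simply mirrors the "speed: 1.7" example mentioned earlier; the exact format Weco expects is not specified on this page.

```python
import statistics
import time


def workload() -> int:
    """Hypothetical stand-in for the code being optimized."""
    return sum(i * i for i in range(10_000))


def median_runtime(fn, warmup: int = 5, samples: int = 100) -> float:
    """Return the median wall-clock time of fn() in seconds."""
    for _ in range(warmup):
        fn()  # warm-up calls are not timed
    timings = []
    for _ in range(samples):
        start = time.perf_counter()
        fn()
        timings.append(time.perf_counter() - start)
    return statistics.median(timings)


if __name__ == "__main__":
    runtime = median_runtime(workload)
    # Print one "name: value" line so a caller can read the metric from stdout.
    print(f"runtime_us: {runtime * 1e6:.1f}")
```

Reporting the median over many samples, after a warm-up phase, follows the same pattern as the benchmark log shown in the demo and makes the metric less sensitive to one-off outliers.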

Get Up and Running in Minutes

Our average onboarding time is under 10 minutes. Simply point Weco at your evaluation script and let it evolve the best code variations for you:

The Next Generation of AIDE ML - Now in the Weco Platform

Outperforming competitors with systematic iteration and optimization focused on measurable results

OpenAI Benchmarked

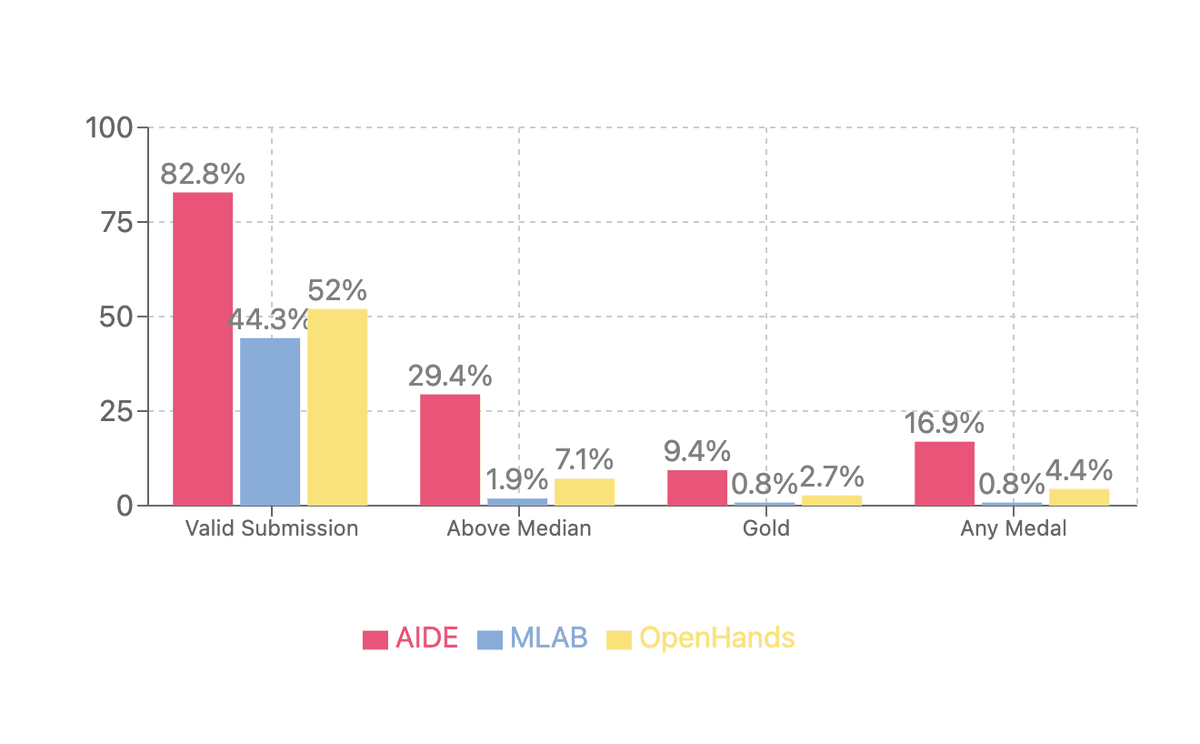

Metric-first engineering that wins more medals on MLE‑Bench:

AIDE ML iterates until the metric says "better." In OpenAI's MLE‑Bench it secured 4× more medals than the next best autonomous agent across 75 Kaggle competitions.

An explicit evaluation loop beats one‑shot code generation.

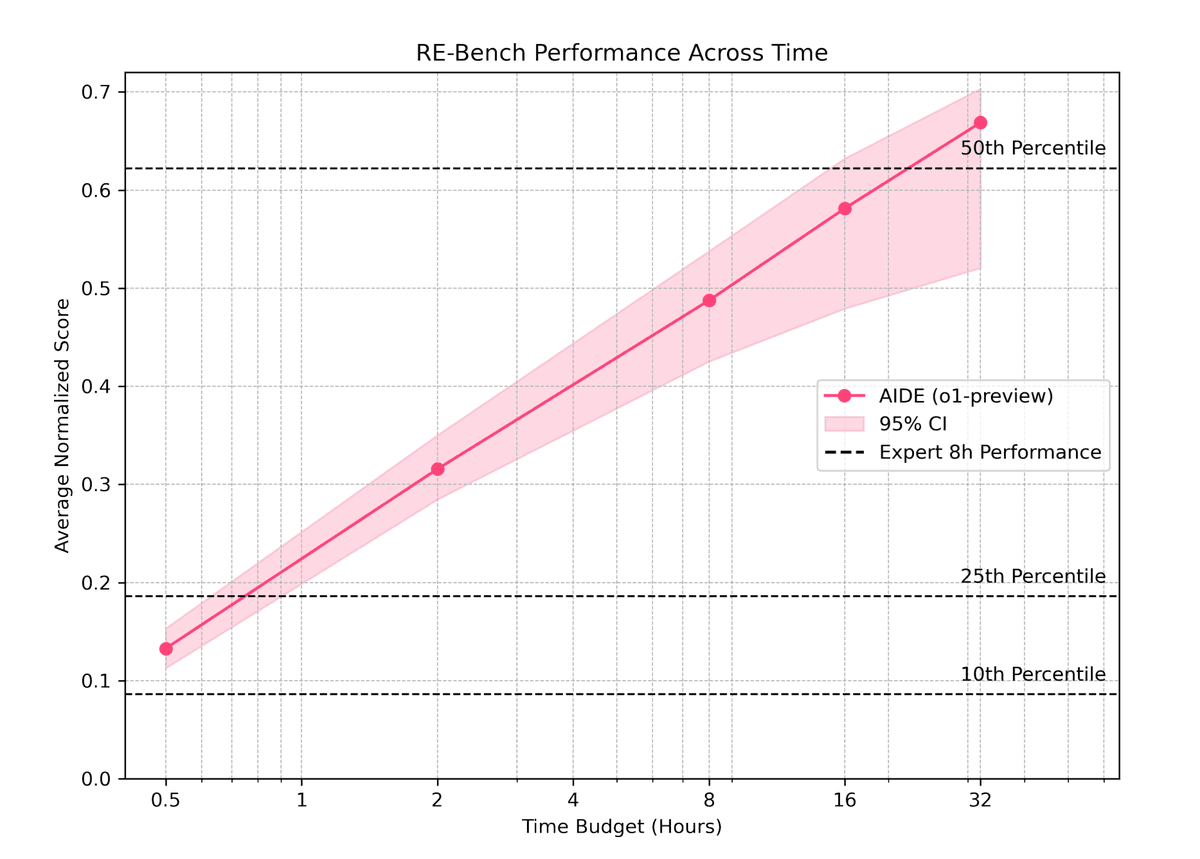

Beyond Human Baselines

Consistently surpassing experts on tight time‑boxed research tasks:

In METR's 6‑hour RE‑Bench challenge, AIDE consistently outperformed seasoned researchers, surfacing "surprising" solutions humans missed.

Open‑Source & Live Now

Use the best version of AIDE ML on the Weco Platform today:

AIDE's core is open‑source — explore the repo or read the paper to dive deeper into our approach.

The Weco Platform is live. Install the CLI with pip install weco, run it against your evaluation script, and watch every experiment stream to the Dashboard in real time. Want a voice in new features? Join the newsletter.

Evolve Beyond Back-and-Forth

Copilot editors require constant babysitting. Weco just works until it's done. It fires off hundreds of targeted experiments, loops each success or miss into a live tree search, and brings fresh variants until your chosen metric climbs - then does it again. Continuous, automatic, relentless:

Choose Your Path

Weco AIDE ML

- Reference implementation of the AIDE algorithm for experimentation

- Only requires a dataset - auto-detects metrics and optimization direction

- Single-machine experiments - runs fully local

- Reproduce paper results and test new agent architectures

- Ideal for academics and rapid prototyping

Weco Platform

20 credits free (≈ 100 steps)

- Massively upgraded AIDE - production-hardened with advanced capabilities

- Works with your evaluation scripts for complex optimization

- Dashboard for experiment tracking and real-time steerability

- Multi-node orchestration - scale from dozens to thousands of workers

- Hybrid architecture - your code stays local, agent runs in cloud

Both options leverage our breakthrough AIDE algorithm for autonomous code improvement.


“So amazing to see something built by this team that's substantially underpinning and influencing OpenAI’s agentic roadmap.”

Ready to Transform Your ML Workflow?

Join ML engineers who've already discovered the power of evaluation-driven optimization. Start automating your experiments today: